For a side project I needed to scrape data for the NYSE Composite Index going back as far as possible.

The most convenient data source is on Yahoo! Finance.

What is the NYSE Composite Index?

The NYSE Composite Index is an index that includes all common stock listed on the New York Stock Exchange. The contribution of each stock to the index (its weight) is determined by its free-float market capitalisation (value of shares available for public trading).

Scraping

I considered doing the scrape with either R or Python. After looking at the structure of the site and tabulated data I decided to go with R.

Libraries

Start by loading a few indispensable libraries.

library(httr2)

library(rvest)

library(dplyr)

library(readr)

library(janitor)

What purpose do these serve?

httr2— HTTP requests (retrieve the web page HTML content);rvest— parsing HTML (extracting content from the HTML);dplyr— data manipulation;readr— for theparse_number()function and easy CSV writing; andjanitor— cleaning up column names.

Query Parameters

There are two query parameters, period1 and period2 that determine the duration of the data returned. These should both be set to integral values, specifically the number of seconds since the UNIX epoch. We could hard-code these but I prefer to generate them dynamically.

Here’s how we do that for the start date (31 December 1965):

as.integer(as.POSIXct("1965-12-31"))

[1] -126316800

_ The value is negative because that date is before the UNIX epoch (1 January 1970).

Create a list with the appropriate parameter values.

params <- list(

period1 = as.integer(as.POSIXct("1965-12-31")),

period2 = as.integer(Sys.time())

)

Headers & Cookies

There’s just one mandatory header required, which is the User Agent string.

headers <- list(

"User-Agent" = "Mozilla/5.0 (X11; Linux x86_64)"

)

There are also a number of cookies, only one of which appears to be important. The content of that cookie appears to evolve over time, so it will require refreshing. Get the cookies and put them into a cookies list.

Request

Now we have all of the prerequisites in place. I’ll store the URL in a variable rather than hard coding into the request.

URL <- "https://finance.yahoo.com/quote/^NYA/history/"

Now build the request using {httr2}. The modular approach to this is really nice. Unpack the lists as function parameters using the !!! (bang-bang-bang) syntax, which, for the benefit of Pythonistas, is equivalent to using ** on a dictionary.

response <- request(URL) |>

req_url_query(!!!params) |>

req_cookies_set(!!!cookies) |>

req_headers(!!!headers) |>

req_perform()

Parse & Wrangle

Next we take the response object and extract the HTML body. Then a quick bit of web scraping (using html_element() and html_table() to locate the <table> and transform its content into a data frame).

The resulting column names are not to my liking. A combination of clean_names() followed by some specific renaming does the trick though.

Finally a mutation to clean up the data:

- convert the dates into standard format (there is no world where month, day, year makes sense!);

- handle missing

volumevalues; - use the

parse_number()function to parse numbers (handles the commas); and - scales

volumeby 1000 (because all values end in “000”).

table <- response |>

resp_body_html() |>

html_element("table") |>

html_table() |>

clean_names() |>

rename_with(~ gsub("_adjusted.*", "", .x)) |>

rename(

close = close_close_price

) |>

mutate(

date = as.Date(strptime(date, "%b %d, %Y")),

volume = ifelse(volume == "-", NA, volume),

across(.cols = -date, .fns = ~ parse_number(.)),

across(.cols = open:close_adj, .fns = ~ sprintf("%.2f", .)),

volume = volume / 1000

) %>%

arrange(date)

Let’s take a look at the fruits of this labour. The oldest data doesn’t have values for volume.

head(table)

date open high low close adj_close volume

<date> <chr> <chr> <chr> <chr> <chr> <dbl>

1 1965-12-31 528.69 528.69 528.69 528.69 528.69 NA

2 1966-01-03 527.21 527.21 527.21 527.21 527.21 NA

3 1966-01-04 527.84 527.84 527.84 527.84 527.84 NA

4 1966-01-05 531.12 531.12 531.12 531.12 531.12 NA

5 1966-01-06 532.07 532.07 532.07 532.07 532.07 NA

6 1966-01-07 532.60 532.60 532.60 532.60 532.60 NA

The new data is complete and up to date.

tail(table, 10)

date open high low close adj_close volume

<date> <chr> <chr> <chr> <chr> <chr> <dbl>

1 2024-10-14 19711.22 19834.18 19690.54 19814.55 19814.55 3005250

2 2024-10-15 19814.55 19814.55 19669.80 19678.71 19678.71 3882120

3 2024-10-16 19678.71 19838.29 19678.71 19825.49 19825.49 3467230

4 2024-10-17 19825.49 19901.49 19825.49 19855.26 19855.26 3480010

5 2024-10-18 19855.26 19905.65 19808.99 19884.81 19884.81 3374490

6 2024-10-21 19884.81 19884.81 19690.32 19720.32 19720.32 3407010

7 2024-10-22 19720.32 19720.32 19579.84 19664.58 19664.58 3342080

8 2024-10-23 19653.36 19653.36 19493.42 19594.24 19594.24 3532650

9 2024-10-24 19594.24 19620.02 19496.22 19560.73 19560.73 3543030

10 2024-10-25 19560.73 19648.74 19438.80 19456.27 19456.27 3501280

Persist

Save it to a CSV file.

write_csv(table, "nyse-composite.csv")

You can grab the CSV file here (not updated).

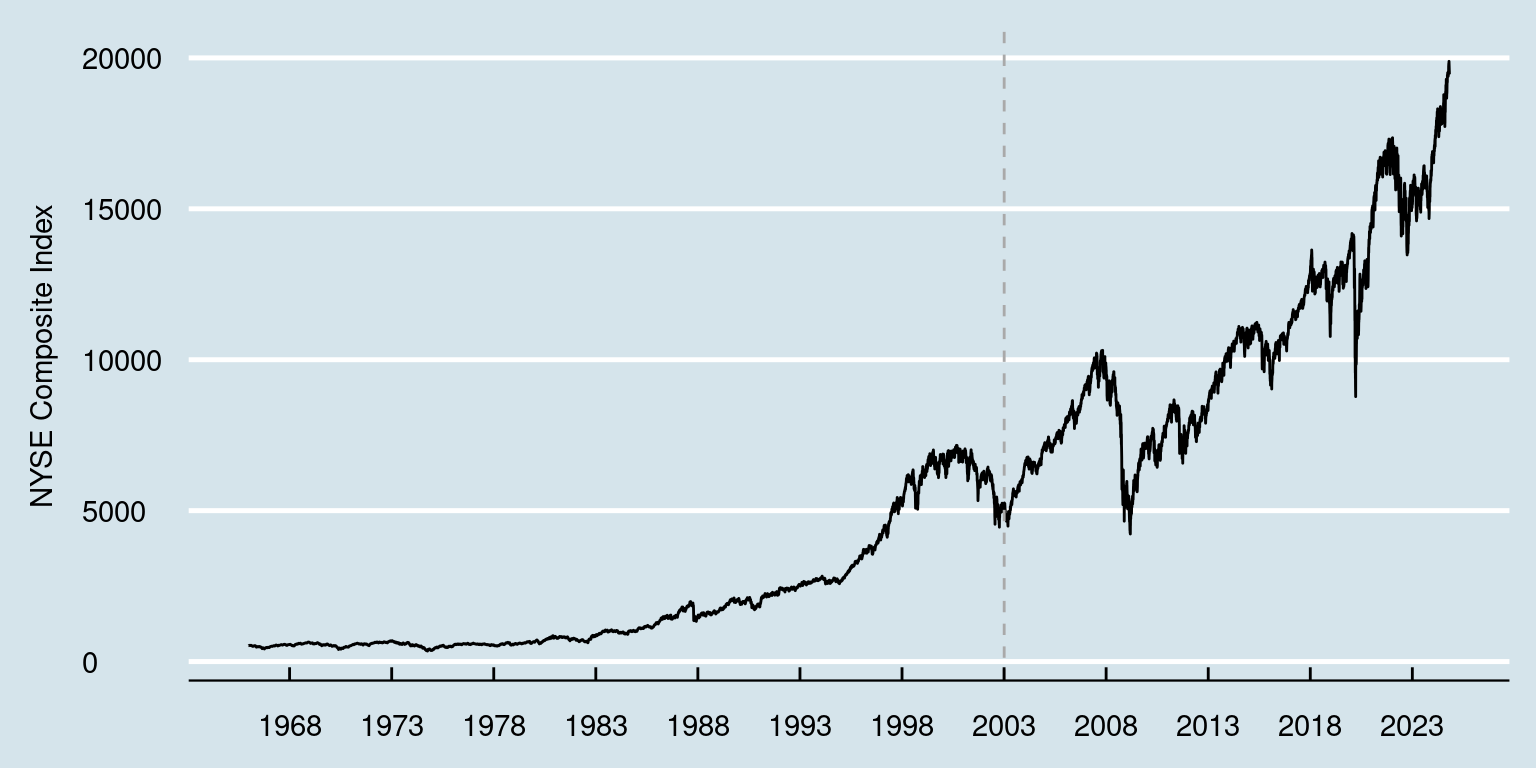

Visualise

Finally let’s create a quick visualisation of the data, just to be sure that it looks legit.

Here’s the corresponding plot for the last decade from Google Finance. The vertical dashed line in the above plot indicates the initial date (3 January 2003) in the plot below.