I’ve recently been given early access to a service that provides data on job listings published by a wide range of companies. The dataset offers a near real-time view of hiring activity, broken down at the company level. This is a potentially valuable signal for tracking labour market trends, gauging corporate growth or powering job intelligence tools.

The data are currently available via

- a summary of current job counts broken down by company (CSV)

- a simple API which gives access to

  - job count histories and

  - details of individual job posts.

Other things that are currently in development:

- custom dashboards and

- packages for accessing the data from either R or Python.

Let’s take a look at what’s available right now.

Historical Job Counts

Using a series of the CSV summary files I was able to easily reconstruct the history of job counts for a selection of companies.
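As a rough illustration of that reconstruction, here is a minimal Python sketch using only the standard library. The column names (`company`, `job_count`), the snapshot dates and the company names are all assumptions made up for the example; the real summary files may well be laid out differently.

```python
import csv
import io
from collections import defaultdict


def build_history(snapshots):
    """Combine (timestamp, csv_text) snapshots into per-company histories.

    Returns {company: [(timestamp, job_count), ...]} sorted by timestamp.
    The "company" and "job_count" column names are assumed, not confirmed.
    """
    history = defaultdict(list)
    for timestamp, csv_text in snapshots:
        for row in csv.DictReader(io.StringIO(csv_text)):
            history[row["company"]].append((timestamp, int(row["job_count"])))
    # ISO-formatted timestamps sort correctly as plain strings.
    return {company: sorted(points) for company, points in history.items()}


# Made-up snapshots, deliberately supplied out of order.
snapshots = [
    ("2025-10-02", "company,job_count\nExample Corp,120\nSample Inc,45\n"),
    ("2025-10-01", "company,job_count\nExample Corp,115\nSample Inc,47\n"),
]

history = build_history(snapshots)
print(history["Example Corp"])
```

In practice each snapshot would be read from a file on disk, with the timestamp taken from the file name or download time.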

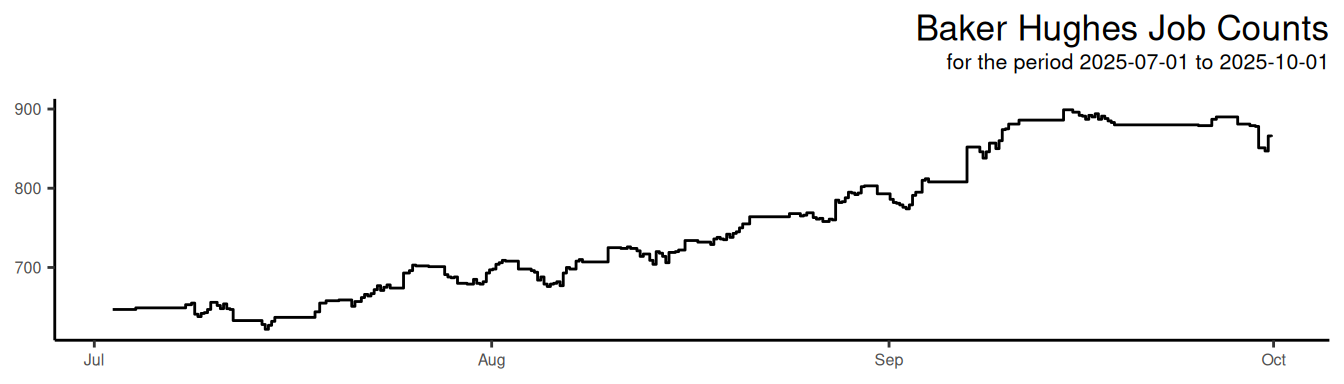

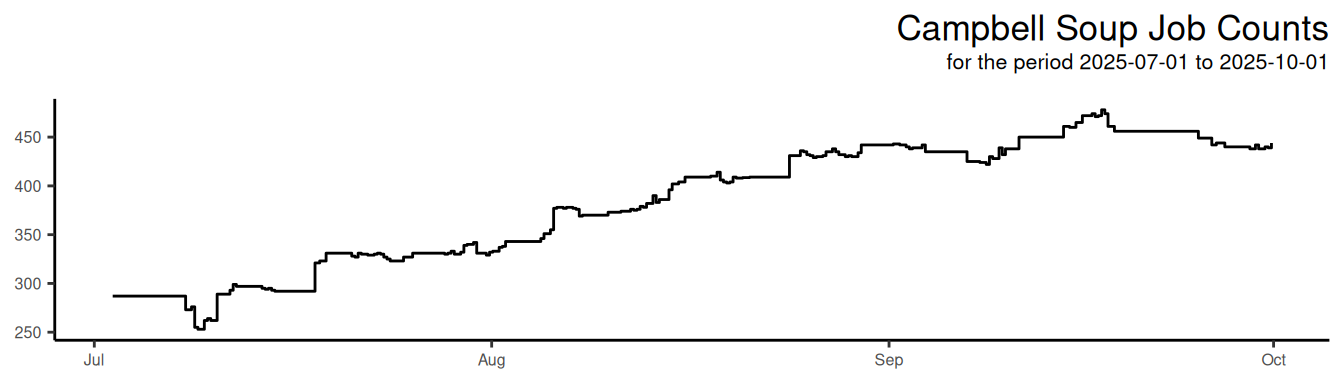

I’ve included plots for four companies below. The first two, Baker Hughes and Campbell’s (of soup fame), are both either in a growth phase or experiencing relatively high employee turnover. These are large companies (with approximately 57,000 and 13,700 employees respectively), so the numbers of jobs listed do not represent a significant proportion of their current workforce.

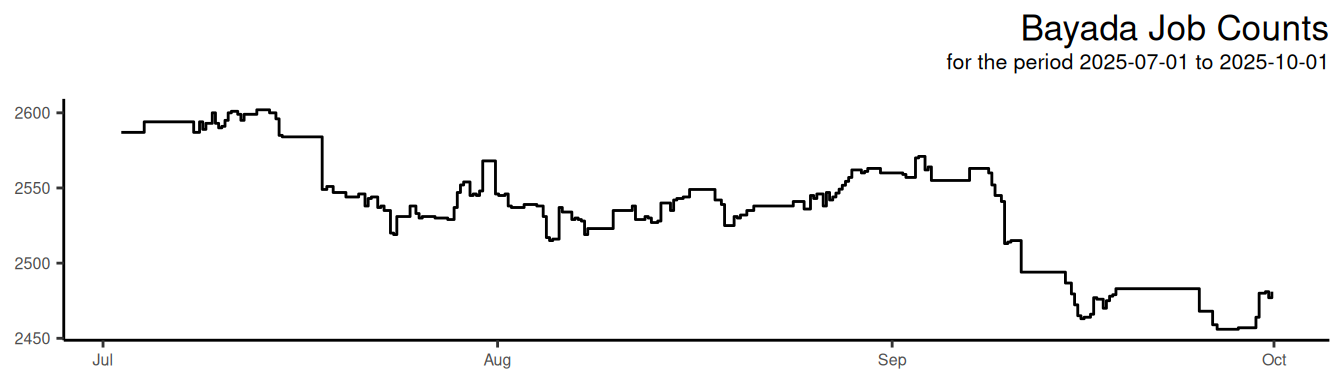

The other two companies, Bayada and GE Vernova, have more stable job counts, but both have periods where the number of open jobs is either growing or contracting over shorter time scales.

Granularity

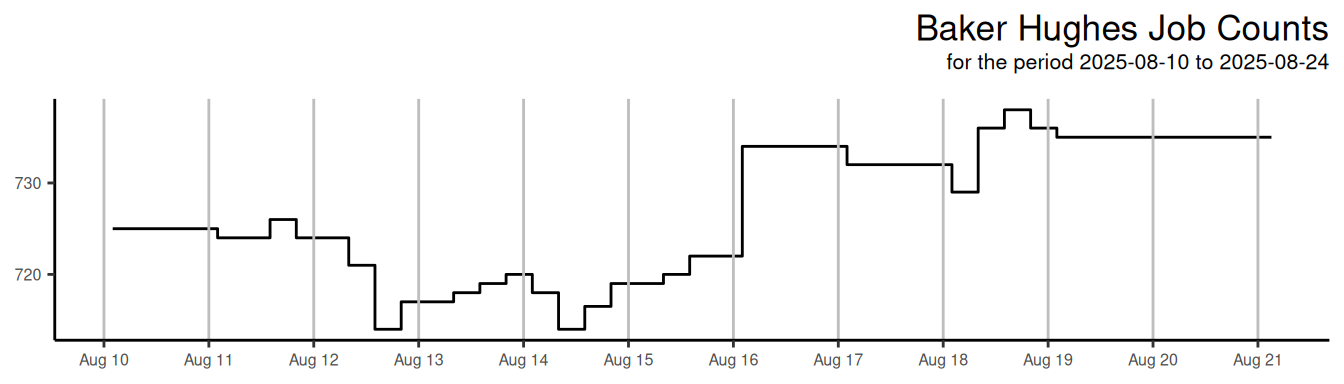

The service is still experimenting with the granularity of the data. Depending on your application, this might be more or less of an issue. I think that the minimum acceptable granularity would be daily. However, they have also been evaluating update periods of 3, 6 and 12 hours. If an application relies on prompt updates then the 3-hour granularity should suffice.

Zooming in on a two-week period of the Baker Hughes data, it’s evident that there are periods where the data are being updated every 6 hours, but also times where updates are only daily.
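One way to check the cadence without squinting at a plot is to tally the gaps between successive observations. The sketch below assumes you already have a list of observation timestamps for a single company; the timestamps shown are invented for illustration and are not taken from the Baker Hughes data.

```python
from collections import Counter
from datetime import datetime


def update_gaps(timestamps):
    """Count gaps (rounded to whole hours) between consecutive observations."""
    times = sorted(datetime.fromisoformat(t) for t in timestamps)
    return Counter(
        round((later - earlier).total_seconds() / 3600)
        for earlier, later in zip(times, times[1:])
    )


# Invented timestamps: three 6-hourly updates followed by two daily ones.
observed = [
    "2025-10-01 00:00:00",
    "2025-10-01 06:00:00",
    "2025-10-01 12:00:00",
    "2025-10-02 12:00:00",
    "2025-10-03 12:00:00",
]

gaps = update_gaps(observed)
for hours, count in sorted(gaps.items()):
    print(f"{hours:>3} h gap: {count} occurrence(s)")
```

A mix of 6 and 24 in the tally is the signature of the switching cadence described above.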

This will stabilise once the service commits to a specific cadence. It’s hard to guess what that might be, but 3 or 6 hours should be sufficient for most applications.

Job Listing Details

Details of individual job posts are available via the API. Here’s an example. The fields currently include the job title, company name, job posting URL, location, publication date and the full description text. Apparently other fields will be added, but they will not be universally populated because the information available is not consistent across job sites.

```json
{
  "title": "Licensed Practical Nurse - Home Health Visits",
  "company": "BAYADA Home Health Care",
  "url": "https://jobs.bayada.com/en/jobs?gh_jid=8207832002",
  "location": "Catonsville, Maryland",
  "published": "2025-10-10 17:55:17",
  "description": "**BAYADA Home Health Care** is looking for a compassionate and dedicated **Licensed Practical Nurse (LPN)** to join our team in our **Baltimore, MD office** on a Per Diem basis. This office services our **adult clients** on a **per visit basis** in homes throughout **West Baltimore County.** \n\nCurrent home health experience is required for per diem. \n\nOne year prior clinical experience as a licensed Practical Nurse is required. \n\nAs a home care nurse, you will be an integral member of a multi-disciplinary health care team that provides skilled nursing and rehabilitative care to clients, affording them the opportunity to receive the medical care required to remain at home."
}
```

One thing that really excites me about this is that the job description is converted from HTML to Markdown. This will make the content a lot easier to store and digest.

📌 I have truncated the description field for brevity, but have verified that the full description is consistent with what’s listed on the original job post.
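To give a feel for how easy the record is to work with, here is a small Python sketch that parses a job post, converts the `published` field to a `datetime` and strips the Markdown bold markers from the description. The sample is a shortened copy of the record above. The `parse_job` helper and the `description_text` key are my own names, and I’m assuming the timestamp always follows the format seen in this one example (its time zone is not stated, so it stays naive).

```python
import json
import re
from datetime import datetime


def parse_job(raw):
    """Parse a job post JSON string into a dict with a few conveniences."""
    job = json.loads(raw)
    # Assumed format, based on the single example record; time zone unknown.
    job["published"] = datetime.strptime(job["published"], "%Y-%m-%d %H:%M:%S")
    # Drop Markdown bold markers (**text**) to get plain text.
    job["description_text"] = re.sub(r"\*\*(.+?)\*\*", r"\1", job["description"])
    return job


# Shortened copy of the example record (description cut for brevity).
sample = {
    "title": "Licensed Practical Nurse - Home Health Visits",
    "company": "BAYADA Home Health Care",
    "url": "https://jobs.bayada.com/en/jobs?gh_jid=8207832002",
    "location": "Catonsville, Maryland",
    "published": "2025-10-10 17:55:17",
    "description": "**BAYADA Home Health Care** is looking for a compassionate "
                   "and dedicated **Licensed Practical Nurse (LPN)** to join our team.",
}

job = parse_job(json.dumps(sample))
print(job["published"].date(), "|", job["title"])
print(job["description_text"])
```

For anything beyond bold text a proper Markdown parser would be a better choice than a regular expression.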

If you’re simply interested in job counts then these details are not of great interest. However, if you’re doing deeper analysis then they are invaluable.

Conclusion

My understanding is that this is going to be a bespoke service. Unlike similar offerings such as LinkUp and Coresignal, which provide broad, standardized datasets across many companies and roles, this service appears to be more narrowly tailored, focusing on specific companies, curated coverage, and potentially more reliable and timely data. The goal seems to be higher quality and precision, rather than scale, which could make it especially valuable for targeted analysis or custom integrations.