I’m busy experimenting with Spark. This is what I did to set up a local cluster on my Ubuntu machine. Before you embark on this you should first set up Hadoop.

- Download the latest release of Spark here. I used 2.1.1, so adjust the file names below if your version differs.

- Unpack the archive.

tar -xvf spark-2.1.1-bin-hadoop2.7.tgz

- Move the resulting folder and create a symbolic link so that you can have multiple versions of Spark installed.

sudo mv spark-2.1.1-bin-hadoop2.7 /usr/local/

sudo ln -s /usr/local/spark-2.1.1-bin-hadoop2.7/ /usr/local/spark

cd /usr/local/spark
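With this layout, switching between installed versions comes down to repointing the link. Here is a minimal sketch; `use_spark_version` is a hypothetical helper, not something that ships with Spark, and it assumes the link lives at /usr/local/spark unless told otherwise.

```shell
# Repoint a symlink at a different unpacked Spark directory.
# use_spark_version is a hypothetical helper, not part of Spark.
use_spark_version() {
    local target="$1"                     # e.g. /usr/local/spark-2.1.1-bin-hadoop2.7
    local link="${2:-/usr/local/spark}"   # symlink to update (assumed default)
    if [ ! -d "$target" ]; then
        echo "no such directory: $target" >&2
        return 1
    fi
    # -s symbolic link, -f replace an existing link,
    # -n treat the existing link as a file rather than following it
    ln -sfn "$target" "$link"
}
```

Because /usr/local is normally owned by root, in practice you would probably run the equivalent `sudo ln -sfn` command directly rather than call the function.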

Also add SPARK_HOME to your environment.

export SPARK_HOME=/usr/local/spark
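An export typed at the prompt only lasts for the current session. One way to make it stick, assuming you use Bash and that ~/.bashrc is the startup file it reads, is to put lines like these there. Adding the bin directory to PATH is an optional extra; none of the later steps rely on it.

```shell
# Candidate lines for ~/.bashrc (assumed startup file).
export SPARK_HOME=/usr/local/spark
# Optional: lets you type spark-shell, pyspark or sparkR without the full path.
export PATH="$PATH:$SPARK_HOME/bin"
```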

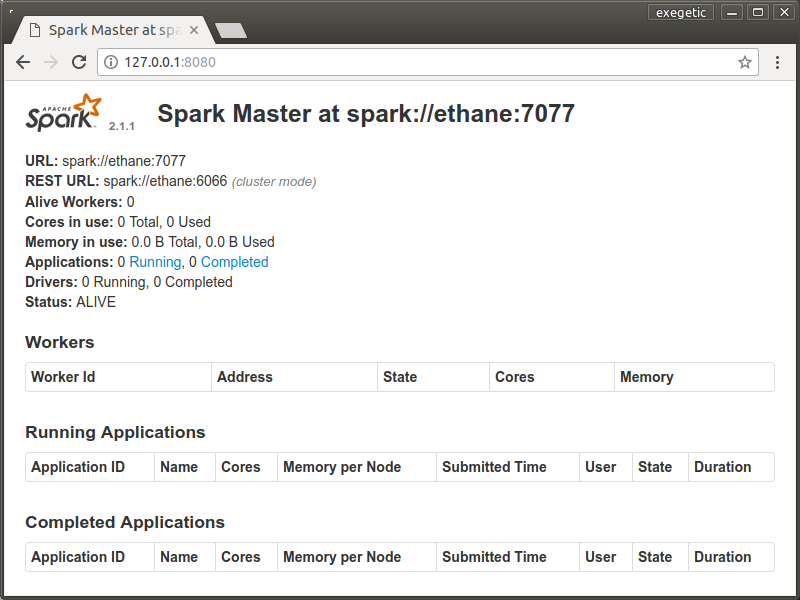

- Start a standalone master server. Once it is running you can browse to 127.0.0.1:8080 to view the status screen.

$SPARK_HOME/sbin/start-master.sh
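The master is reached through a URL of the form spark://HOST:PORT, which the worker needs in the next step. It is shown at the top of the status screen, and the master also writes it to its log. The sketch below pulls it out of a log file. `master_url_from_log` is a hypothetical helper, and the exact wording of the log line is an assumption about a default installation.

```shell
# Print the first spark:// URL found in the given master log file.
# Assumes the log contains a line along the lines of:
#   ... INFO Master: Starting Spark master at spark://ethane:7077
master_url_from_log() {
    grep -o 'spark://[^[:space:]]*' "$1" | head -n 1
}
```

The start script prints the path of the log file it is writing to, so you can pass that path straight to the function.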

- Start a worker process.

$SPARK_HOME/sbin/start-slave.sh spark://ethane:7077

To get this to work I had to add an entry for my machine, whose hostname is ethane, to /etc/hosts:

127.0.0.1 ethane
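If the worker cannot reach the master, a quick thing to check is whether the host name in the URL is actually listed. A minimal sketch, with `has_hosts_entry` as a hypothetical helper that only looks at the hosts file and ignores DNS and any other resolver:

```shell
# Succeed if NAME appears as a host name or alias in a hosts file.
# has_hosts_entry is a hypothetical helper; it does not consult DNS.
has_hosts_entry() {
    local name="$1"
    local file="${2:-/etc/hosts}"
    # Strip comments, then compare every column after the address with NAME.
    awk -v name="$name" '
        { sub(/#.*/, "") }
        { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }
    ' "$file"
}
```

For example, `has_hosts_entry "$(hostname)" && echo "listed"` reports whether the current machine has an entry.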

- Test out the Spark shell. You’ll note that this exposes the native Scala interface to Spark.

$SPARK_HOME/bin/spark-shell

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.1.1
      /_/

Using Scala version 2.11.8 (OpenJDK 64-Bit Server VM, Java 1.8.0_131)
Type in expressions to have them evaluated.
Type :help for more information.

scala> println("Spark shell is running")
Spark shell is running

scala>

To get this to work properly it might be necessary to first add the native Hadoop libraries to the library path.

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/hadoop/lib/native
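One wrinkle with the export above: if LD_LIBRARY_PATH starts out empty the result begins with a stray colon, and running the line repeatedly keeps appending the same directory. A slightly more defensive sketch follows. `append_library_path` is a hypothetical helper, and the Hadoop path is the one used above.

```shell
# Append a directory to LD_LIBRARY_PATH only if it is not already listed.
# append_library_path is a hypothetical helper.
append_library_path() {
    local dir="$1"
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$dir:"*) ;;   # already listed, nothing to do
        *) LD_LIBRARY_PATH="${LD_LIBRARY_PATH:+$LD_LIBRARY_PATH:}$dir" ;;
    esac
    export LD_LIBRARY_PATH
}

append_library_path /usr/local/hadoop/lib/native
```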

- Maybe Scala is not your cup of tea and you’d prefer to use Python. No problem!

$SPARK_HOME/bin/pyspark

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.1.1
      /_/

Using Python version 2.7.13 (default, Jan 19 2017 14:48:08)
SparkSession available as 'spark'.
>>>

Of course you’ll probably want to interact with Python via a Jupyter Notebook, in which case take a look at this.

- Finally, if you prefer to work with R, that’s also catered for.

$SPARK_HOME/bin/sparkR

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.1.1
      /_/

SparkSession available as 'spark'.
> spark
Java ref type org.apache.spark.sql.SparkSession id 1
>

This is a lightweight interface to Spark from R. To find out more about {SparkR}, check out the documentation here. For a more user-friendly experience you might want to look at sparklyr.

- When you are done you can shut down the slave and master Spark processes.

$SPARK_HOME/sbin/stop-slave.sh

$SPARK_HOME/sbin/stop-master.sh
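To confirm that nothing was left running, you can look for the daemons in the output of `jps`, the JVM process lister that ships with the JDK. A minimal sketch, assuming the standalone daemons appear under the class names Master and Worker; `filter_spark_daemons` is a hypothetical helper.

```shell
# Keep only the standalone Spark daemons from jps-style "PID NAME" lines.
# filter_spark_daemons is a hypothetical helper; it reads standard input.
filter_spark_daemons() {
    awk '$2 == "Master" || $2 == "Worker"'
}
```

Running `jps | filter_spark_daemons` should print nothing once both stop scripts have finished. Run between the start and stop steps, it should list one Master and one Worker.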